Our story continues in Erlangen, Germany.

Werner Jacobi was working for Siemens in Erlangen, where in 1949 he filed a patent application for a “semiconductor amplifier.” The semiconductor amplifier was a circuit of five transistors on a single substrate. Effectively, it was the first integrated circuit. However, the semiconductor amplifier remained largely unknown back then and was not put to commercial use.

The Nobel Committee, however, credited Jack Kilby with the invention of the integrated circuit and awarded him the Nobel Prize in Physics. That is why he is called the Father of the Microchip – a title that would make anyone envious...

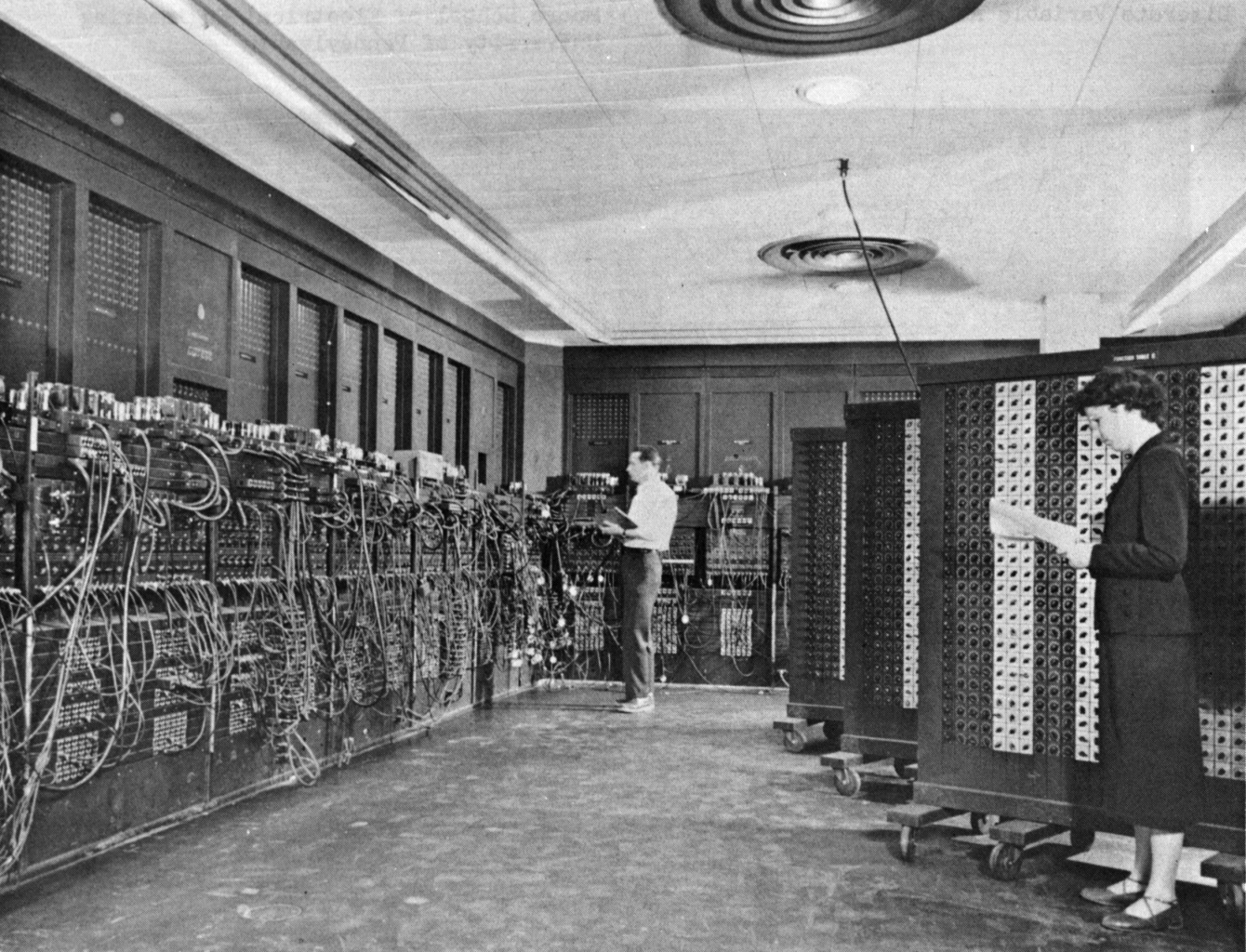

Jack began working at Texas Instruments in 1958. New hires were not given any time off in the summer, and given the scorching temperatures down in Texas, he was probably not delighted about this. On the other hand, at least the labs were empty. “As a new employee, I had no vacation time coming and was left alone,” he said. So he started to experiment. He turned his mind to the “tyranny of numbers”: the problem faced by computer engineers that, as the number of components in computers continued to grow, it was becoming increasingly difficult to wire them all together. Finally, he came to the conclusion that the problem could be solved by integrating various components in a single semiconductor.

On July 24, 1958, he wrote down the crucial idea in his lab notebook: “The following circuit elements could be made on a single slice: resistors, capacitor, distributed capacitor, transistor.” Two months later, the big day arrived: Jack unveiled the first example of a working integrated circuit. There was not much to see – just a piece of germanium with a few wires attached on a piece of glass no larger than a paper clip. Jack pressed the switch, and those present saw a sine wave appear on an oscilloscope. Clearly, it was not much of a spectacle, which may explain why the integrated circuit did not enjoy much success at first.

Jack then applied his invention to building pocket calculators. Earlier calculators were the size of a typewriter and took much, much longer to carry out calculations.

Around the same time, Robert Noyce was developing his own integrated circuit. Jack and Robert themselves never fought over this, although their companies, Texas Instruments and Fairchild Semiconductor, certainly did. When Jack received the Nobel Prize in 2000, Robert had unfortunately already died, and the prize is not awarded posthumously. Nevertheless, Robert held the patent for the first “monolithic” integrated circuit – that is, an IC manufactured from a single monocrystalline substrate.

In 1960, the integrated circuit went into production at Texas Instruments and Fairchild Semiconductor. Back then, the circuits possessed just a few dozen transistors. As the years went on, their complexity would skyrocket.