There is room for AI even in the smallest space

AI and ML: these letters stand for a new era of artificial intelligence, machine learning and microelectronic systems that enhance many areas and applications, including embedded systems. From March 14 to 16 at embedded world 2023 in Nuremberg, AI/ML experts from the Fraunhofer Institute for Integrated Circuits IIS will showcase edge AI developments in Hall 4-422, ranging from AI integration into existing hardware to new approaches to AI-centric hardware design.

Optimal exploration of the design space

Space for extensive analysis and pre-processing in existing hardware and embedded systems is extremely limited. The key question is therefore how to implement powerful AI/ML applications under these constraints. The solution is multi-objective design space exploration that suits both the restricted hardware space and the application. To this end, experts at the Fraunhofer Institute for Integrated Circuits IIS develop automatic exploration and optimization techniques that use reinforcement learning and genetic algorithms to quickly explore a wide variety of solutions, including classical machine learning pipelines and efficiently compressed deep learning models.
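To illustrate what "multi-objective" means here, the following minimal Python sketch filters a handful of candidate models down to their Pareto front: the candidates that no other candidate beats on every objective at once. It is not the Fraunhofer IIS tooling. The candidate names, the three objectives (error, memory, energy) and all numbers are made up for illustration, and a real exploration would generate candidates with a search method such as a genetic algorithm rather than from a fixed list.

```python
from typing import List, NamedTuple


class Candidate(NamedTuple):
    """One hypothetical point in the design space (all values are invented)."""
    name: str
    error: float      # classification error, lower is better
    memory_kb: float  # memory footprint, lower is better
    energy_mj: float  # energy per inference, lower is better


def dominates(a: Candidate, b: Candidate) -> bool:
    """True if a is at least as good as b everywhere and strictly better somewhere."""
    a_obj, b_obj = a[1:], b[1:]  # skip the name, compare objectives only
    no_worse = all(x <= y for x, y in zip(a_obj, b_obj))
    strictly_better = any(x < y for x, y in zip(a_obj, b_obj))
    return no_worse and strictly_better


def pareto_front(candidates: List[Candidate]) -> List[Candidate]:
    """Keep only the candidates that no other candidate dominates."""
    return [c for c in candidates
            if not any(dominates(other, c) for other in candidates)]


# Illustrative candidates: classical pipelines and compressed deep models.
CANDIDATES = [
    Candidate("decision_tree", 0.12, 8, 0.02),
    Candidate("random_forest", 0.08, 120, 0.30),
    Candidate("cnn_int8", 0.05, 250, 1.10),
    Candidate("cnn_float32", 0.05, 1000, 3.50),
    Candidate("mlp_pruned", 0.09, 150, 0.40),
]

if __name__ == "__main__":
    for c in pareto_front(CANDIDATES):
        print(c)
```

In this toy data set, the uncompressed network and the pruned MLP drop out because another candidate is at least as accurate while needing less memory and energy. The remaining candidates represent genuine trade-offs, and choosing among them depends on the target hardware and the application.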

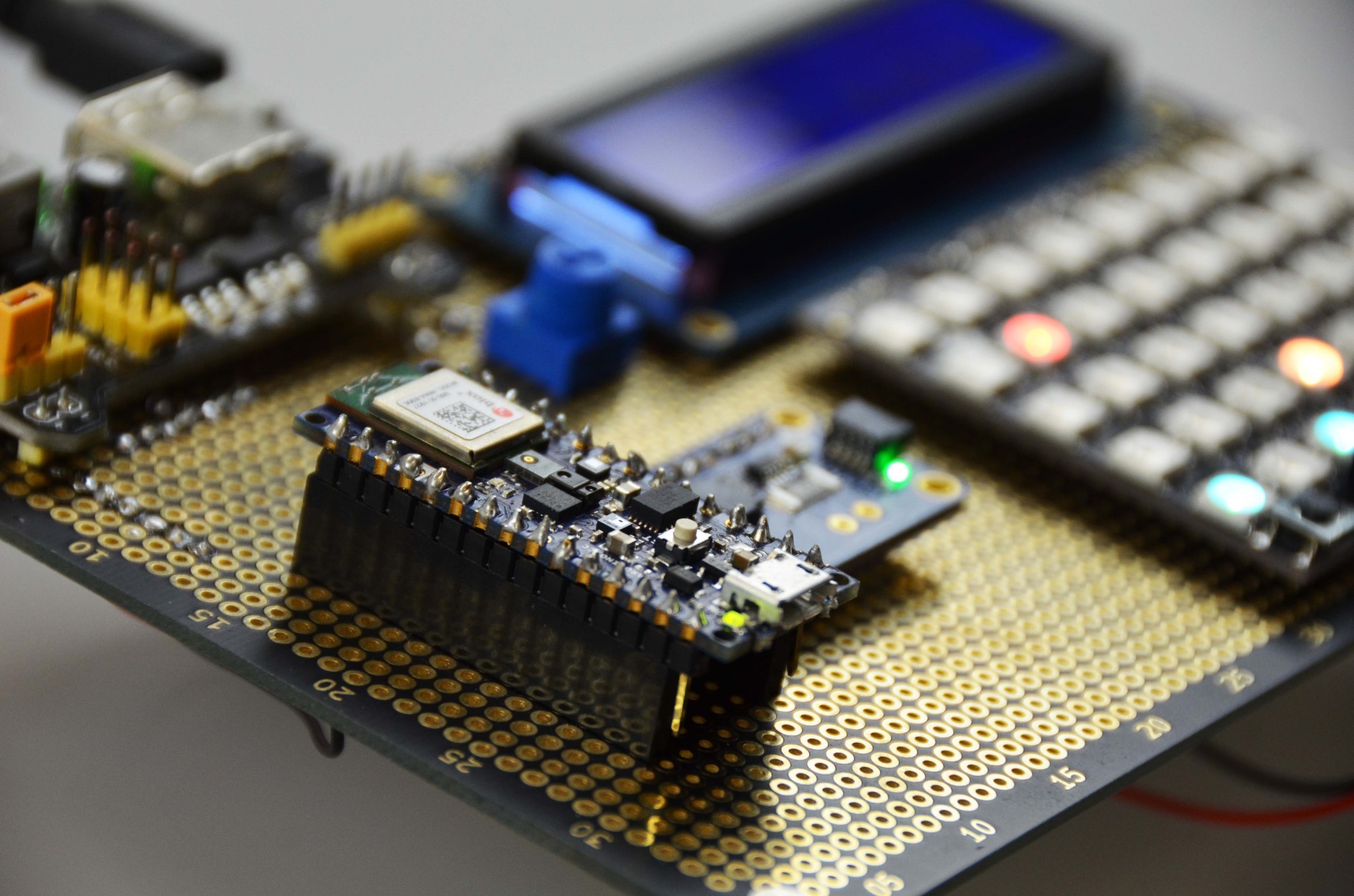

“With embeddif.[ai], Fraunhofer IIS offers rapid development of AI solutions for various embedded hardware systems,” explains Nicolas Witt, group manager Machine Learning & Validation. Customers can run AI applications energy-efficiently on site, independent of a cloud server. These advantages enable companies to use artificial intelligence in the smallest possible space for their applications.

As close as possible to the action

AI and ML are a hard-to-beat advantage, especially for embedded sensors and systems, because they enable fast, efficient data analysis as close as possible to data acquisition and to any actuators. Short distances also mean low latency, and there is no need for additional analysis hardware or complex cloud connectivity, both of which come at the expense of energy consumption, sustainability and efficiency. When selecting and applying AI pipelines, the scientists focus on methods that can be implemented as fast and as energy-efficiently as possible directly on commercial microcontrollers, FPGAs or graphics cards, and that make the best use of the limited space available. With this level of customization, AI can be integrated into existing hardware right down to the sensor, the camera or connected embedded modules. Fraunhofer IIS offers “Joint Labs” to realize customers' edge AI applications together with extensive know-how transfer. Furthermore, embedded AI development frameworks serve as a quick start for system programmers and can be adapted to their applications, including training and support.

When AI moves right into the chip

In addition to crafting and adapting AI methods for existing hardware, the scientists at Fraunhofer IIS focus on neuromorphic computing, a chip architecture based on the principle of biological neural networks. Spiking neural networks (SNNs) are one example. “Basically, SNNs function similarly to a classic neural network. They send information in the form of short pulses,” says Johannes Leugering, Chief Scientist for embedded AI. “The trick is to send a very small amount of information only occasionally, just when something interesting has happened. In this way, we reduce the amount of data and, in turn, energy consumption.” Fraunhofer IIS develops both spiking and convolutional neural networks in qualified mixed-signal CMOS technologies, which are scalable and configurable for a wide range of applications. The software tools required for training the networks and for transferring them to the hardware are developed in tandem. The resulting solutions are able to offer more parallel processing and less on-chip data transfer than comparable systems based on the von Neumann architecture.
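The idea of sending pulses "only occasionally" can be sketched with a leaky integrate-and-fire neuron, a common textbook model of a spiking unit. The Python example below is a simplified discrete-time illustration, not the Fraunhofer IIS hardware or software. The function name, the leak factor, the threshold and the input values are all assumptions chosen for readability.

```python
from typing import List


def lif_neuron(inputs: List[float], leak: float = 0.5,
               threshold: float = 1.0) -> List[int]:
    """Simulate one leaky integrate-and-fire neuron in discrete time.

    At each step the membrane potential decays by the (assumed) leak
    factor, the new input is added, and a spike (1) is emitted only if
    the potential reaches the threshold. After a spike it resets to zero.
    """
    potential = 0.0
    spikes = []
    for x in inputs:
        potential = leak * potential + x  # leaky integration of the input
        if potential >= threshold:
            spikes.append(1)              # something "interesting" happened
            potential = 0.0               # reset after firing
        else:
            spikes.append(0)              # stay silent, send nothing
    return spikes


# Mostly quiet input with a short burst of activity in the middle.
SIGNAL = [0.0, 0.1, 0.0, 0.9, 0.8, 0.0, 0.1, 0.0]

if __name__ == "__main__":
    print(lif_neuron(SIGNAL))  # [0, 0, 0, 0, 1, 0, 0, 0]
```

Eight input samples produce a single output pulse, emitted when the burst pushes the potential over the threshold. Downstream units only need to react to that one event, which is the source of the data and energy savings described in the quote.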
