Today, hardly any area of life is untouched by AI. Two well-known branches of machine learning, unsupervised and supervised learning, deal predominantly with static problems. Reinforcement learning (RL), by contrast, is the branch of machine learning concerned with learning optimal behavior in dynamic environments. It is used in a growing number of applications, including autonomous vehicles and drones, smart home control, and the control of production plants and logistics fleets. The behavior of robots and prostheses can likewise be learned with RL, and AI stock-trading agents as well as recommendation systems for movies and music can also be based on it. Further examples are virtual assistants, e.g. for e-mail and appointments. Large language models such as ChatGPT are also adapted using reinforcement learning from human feedback (RLHF).

Reinforcement Learning for Industrial Applications

Reinforcement Learning

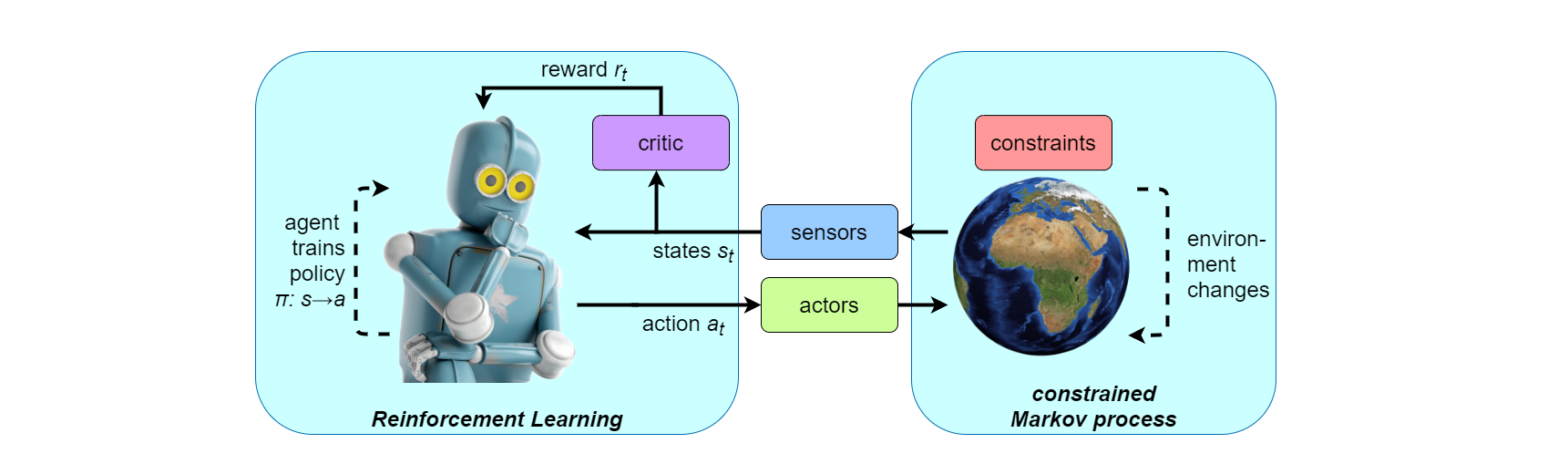

In reinforcement learning, an AI agent interacts with its environment and explores it through trial and error. In doing so, it learns a policy, i.e. rules that determine which actions and decisions it takes. Learning is guided by a reward, so the learned behavior can be shaped through targeted constraints and adjustments to the reward. The policy learned by the AI agent therefore depends on the training data, the representation of the environment's state, and the reward. This promotes safety as well as efficiency and allows the behavior to be adapted to several desired goals at once, tailoring it to the application.
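The interaction loop described above can be illustrated with a minimal sketch. It uses tabular Q-learning, one common RL algorithm chosen here purely for illustration, on a hypothetical toy task in which an agent walks along a line of six positions and is rewarded for reaching the last one. The environment, the reward values and all names (`step`, `train`, `greedy_policy`) are assumptions made for this example.

```python
import random

N_STATES = 6        # positions 0..5 on a line; position 5 is the goal (toy task)
ACTIONS = (-1, +1)  # step left or step right

def step(state, action):
    """Toy environment: returns (next_state, reward, done)."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else -0.01  # goal reward, small cost for every other step
    return next_state, reward, done

def train(episodes=500, alpha=0.5, gamma=0.95, epsilon=0.2, seed=0):
    """Learn a table of action values by trial and error (tabular Q-learning)."""
    rng = random.Random(seed)
    q = [[0.0, 0.0] for _ in range(N_STATES)]  # q[state][action_index]
    for _ in range(episodes):
        state, done, steps = 0, False, 0
        while not done and steps < 100:
            # Explore with probability epsilon, otherwise act greedily.
            if rng.random() < epsilon:
                a = rng.randrange(2)
            else:
                a = max(range(2), key=lambda i: q[state][i])
            next_state, reward, done = step(state, ACTIONS[a])
            # Move the estimate toward reward + discounted best future value.
            target = reward + (0.0 if done else gamma * max(q[next_state]))
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
            steps += 1
    return q

def greedy_policy(q):
    """Extract the learned policy: the best action for every non-goal state."""
    return [ACTIONS[max(range(2), key=lambda i: q[s][i])] for s in range(N_STATES - 1)]

if __name__ == "__main__":
    print(greedy_policy(train()))
```

Changing the reward in `step`, for example by raising the per-step cost, changes what the agent learns without touching the learning code. This is the sense in which the reward shapes the behavior.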

Applications

Applications of reinforcement learning are found mainly in industrial environments, such as chemical processes or manufacturing. The focus is on the optimal control and regulation of machinery as well as on its planning. Our competencies can be grouped into the following application topics.

Reinforcement learning for safe regulation / control

Reinforcement learning finds optimal behavior in dynamic environments, so an optimal control or regulation strategy can be trained with AI. Once the AI has finished training, the learned policy can be frozen and tested for safety. In this way, an efficient and lean control component can be integrated into the machinery. In addition, a classic decision-tree-based control system can be derived from the AI via imitation learning. Such a controller can then be formally verified using propositional logic.
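As a rough illustration of this idea, the following sketch imitates a frozen policy with the smallest possible decision tree (a single threshold split) and then checks a safety property exhaustively over a discretized state range. Everything in it is hypothetical: the heater scenario, the stand-in `teacher_policy`, the temperature limit and the function names are assumptions for this example, and a real controller would need a deeper tree and a proper verification tool.

```python
def teacher_policy(temp_c):
    """Stand-in for a frozen, trained RL policy (hypothetical): 1 = heater on, 0 = off."""
    return 1 if temp_c < 62.5 else 0

def fit_stump(samples):
    """Imitation step: fit the rule 'heater on if temp < threshold' to (temp, action) pairs."""
    temps = sorted(t for t, _ in samples)
    # Candidate thresholds are the midpoints between neighbouring observed temperatures.
    candidates = [(a + b) / 2 for a, b in zip(temps, temps[1:])]

    def errors(threshold):
        # Count the samples on which the rule disagrees with the teacher.
        return sum((1 if t < threshold else 0) != action for t, action in samples)

    return min(candidates, key=errors)

def verify_safe(threshold, t_min=0, t_max=100, limit=80):
    """Check on an integer grid that the heater is never on at or above `limit`."""
    return all(not (t < threshold and t >= limit) for t in range(t_min, t_max + 1))

# Record the teacher's decisions, imitate them, then verify the resulting rule.
samples = [(t, teacher_policy(t)) for t in range(0, 101, 5)]
threshold = fit_stump(samples)

if __name__ == "__main__":
    print(threshold, verify_safe(threshold))
```

Because the imitated controller is a plain rule rather than a neural network, every decision it can make is enumerable, which is what makes this kind of check feasible.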

Reinforcement learning for machinery planning and optimization

AI-based process optimization, especially with reinforcement learning, is powerful because the AI can take many requirements into account at the same time. In mechanical or chemical production, for example, all machinery components can be adjusted so that the overall result is both cost-optimal and sustainable. Transport routes can be optimized in the same way. Another particular strength of RL is its dynamic reaction to change: the AI can adapt quickly to changes in raw material prices, qualities and availability and find an optimal configuration for the new situation.
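One way to express several requirements at once is a single scalar reward that combines them. The sketch below assumes a weighted sum of production cost and emissions. The two machine configurations, all numbers, the weight `co2_weight` and the function names are invented for illustration, and the exhaustive `max` over two options merely stands in for the choice a trained agent would make.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Config:
    name: str
    material_kg: float  # raw material used per batch
    energy_kwh: float   # energy used per batch
    co2_kg: float       # emissions per batch

def reward(cfg, material_price, energy_price, co2_weight=0.5):
    """Higher is better: negative cost minus a weighted emissions penalty."""
    cost = cfg.material_kg * material_price + cfg.energy_kwh * energy_price
    return -(cost + co2_weight * cfg.co2_kg)

# Two hypothetical ways of running the same plant.
CONFIGS = [
    Config("material-lean", material_kg=8.0, energy_kwh=30.0, co2_kg=12.0),
    Config("energy-lean", material_kg=10.0, energy_kwh=20.0, co2_kg=8.0),
]

def best_config(material_price, energy_price):
    """Pick the configuration with the highest reward under the current prices."""
    return max(CONFIGS, key=lambda c: reward(c, material_price, energy_price))

if __name__ == "__main__":
    print(best_config(5.0, 0.1).name)  # expensive raw material
    print(best_config(1.0, 0.5).name)  # expensive energy
```

With expensive raw material the material-lean configuration scores higher, and with expensive energy the energy-lean one does, so the preferred configuration shifts as soon as prices change.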

Our offer

Workshops

We would be happy to work with you to determine how reinforcement learning can optimize processes in your company and reduce the workload of your specialists. Interested? Feel free to contact us!

Research collaboration

At the Friedrich-Alexander-Universität Erlangen-Nürnberg (FAU), Fraunhofer IIS offers lectures on (Deep) Reinforcement Learning as well as various seminars on Machine Learning.

Seminars

Fraunhofer IIS offers online seminars on machine learning and reinforcement learning.