Machine Learning in Human-Machine Interfaces

Goal

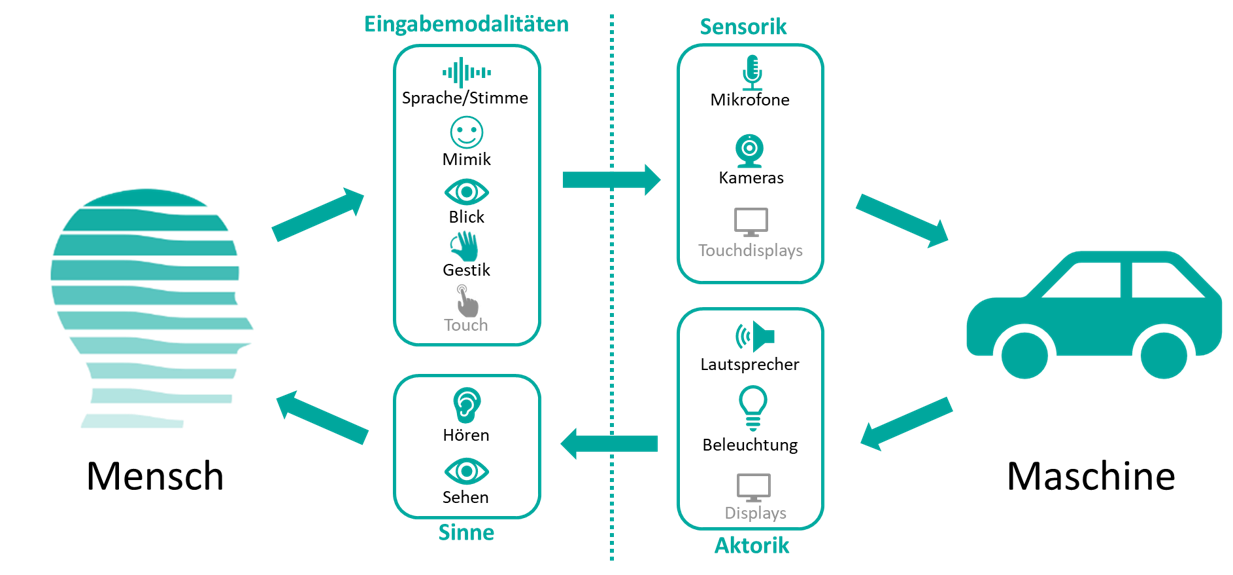

Development of a self-supporting, natural human-machine interface for automated driving that uses multimodal input and output modes, including facial expressions, gestures, gaze, and speech.

Combined with considerations of the immediate environment (e.g., the vehicle interior), this yields a holistic, machine-learning-based approach to developing a human-machine interface (HMI) tailored to the human senses.

The system facilitates interaction while improving user experience and acceptance of autonomous driving in all areas. The methods for measuring user satisfaction developed in the course of the project will serve as the basis for other projects of similar design.