Transparent AI Decisions in Medical Technology

Objective

The aim of the BMBF project TraMeExCo (Transparent Medical Expert Companion) is to research and develop new methods for robust and explainable AI (XAI) in three complementary medical technology applications: digital pathology, pain analysis and cardiology. The proposed system is intended to support doctors and clinical staff in making diagnoses and treatment decisions.

Partners

University of Bamberg | Professorship for Cognitive Systems

- The University of Bamberg focuses on the design, implementation and testing of methods that explain the decisions of diagnostic systems, using local interpretable model-agnostic explanations (LIME), layer-wise relevance propagation (LRP) and inductive logic programming (ILP).
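To give an idea of how a LIME-style explanation works, the following minimal Python sketch fits a weighted linear surrogate model around a single input of a black-box classifier. It is an illustration only and not the project's implementation: the function name `lime_style_explanation`, the zero baseline for switched-off features, the kernel width and the ridge term are all assumptions made for this example.

```python
import numpy as np

def lime_style_explanation(predict_fn, x, num_samples=500, kernel_width=0.75, seed=0):
    """Return one local importance weight per feature of x (hypothetical helper)."""
    rng = np.random.default_rng(seed)
    d = x.shape[0]

    # Random binary masks: 1 keeps a feature, 0 replaces it with a zero baseline (assumption).
    masks = rng.integers(0, 2, size=(num_samples, d))
    masks[0] = 1  # always include the unperturbed instance
    perturbed = masks * x

    # Query the black-box model on the perturbed inputs.
    preds = predict_fn(perturbed)

    # Samples that switch off fewer features are closer to x and get a higher weight.
    dist = np.sqrt((1 - masks).sum(axis=1) / d)
    weights = np.exp(-(dist ** 2) / kernel_width ** 2)

    # Weighted ridge regression on the masks, with an intercept column.
    Z = np.hstack([masks, np.ones((num_samples, 1))])
    A = Z.T @ (weights[:, None] * Z) + 1e-3 * np.eye(d + 1)
    b = Z.T @ (weights * preds)
    coef = np.linalg.solve(A, b)
    return coef[:-1]  # drop the intercept


# Usage: a toy "black box" whose true feature effects are 3, -2 and 0.
def toy_model(X):
    return 3.0 * X[:, 0] - 2.0 * X[:, 1]

importances = lime_style_explanation(toy_model, np.array([1.0, 1.0, 1.0]))
print(np.round(importances, 2))
```

For this toy model the surrogate weights come out close to the true effects, which is the behavior one expects when the black box is itself linear near the input. In a medical imaging setting the features would typically be image regions (superpixels) rather than individual numbers.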

Fraunhofer HHI | Department of Video Communication and Applications

- Fraunhofer HHI is further refining layer-wise relevance propagation (LRP) approaches.

Fraunhofer IIS | Smart Sensing and Electronics

- The Digital Health Systems business unit investigates and implements few-shot learning and heat map approaches for digital pathology. Long short-term memory (LSTM) networks help to determine heart rate variability in noisy ECG and PPG data.

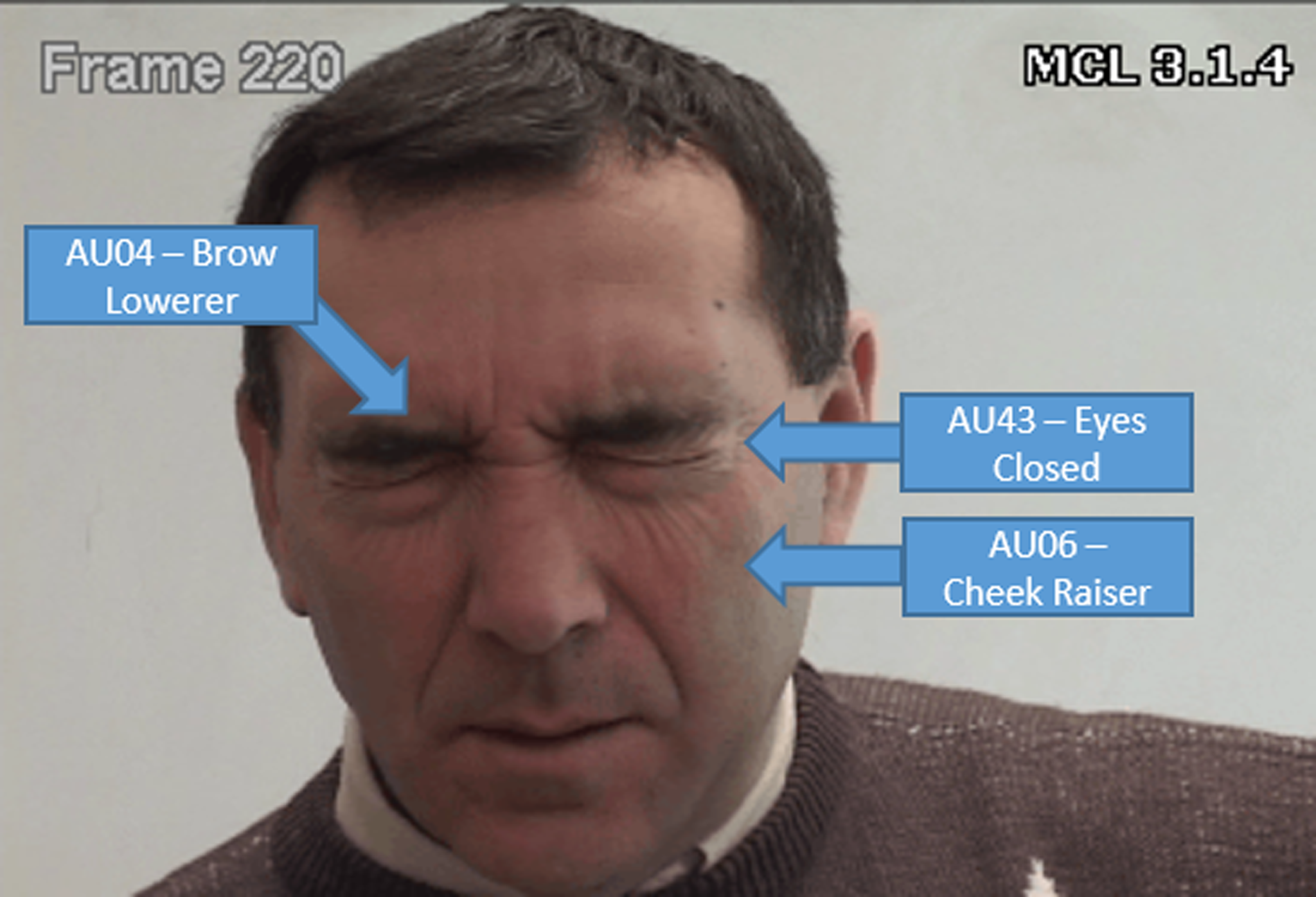

- The Facial Analysis Solutions business unit is collaborating with the University of Bamberg to research Bayesian deep learning methods using pain videos. Researchers make use of the Facial Action Coding System (FACS) developed by Ekman and Friesen, in which every movement of individual facial muscles (an action unit), such as the contraction of the eyebrows, is described, interpreted and detected. The automatically detected presence of certain action units indicates pain.
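As an illustration of how detected action units can be turned into a pain indicator, the sketch below computes the Prkachin and Solomon Pain Intensity (PSPI) score, a published FACS-based measure. The project description does not state that TraMeExCo uses PSPI, so this is an assumption chosen for the example; the function name `pspi_score` and the dictionary input format are hypothetical as well.

```python
def pspi_score(au_intensities):
    """Compute the PSPI score from FACS action unit intensities.

    au_intensities maps action unit names to intensities (hypothetical format):
    AU4, AU6, AU7, AU9 and AU10 on a 0-5 scale, AU43 (eyes closed) as 0 or 1.
    Missing action units are treated as absent (intensity 0).
    """
    au = lambda name: au_intensities.get(name, 0)
    return (
        au("AU4")                      # brow lowering
        + max(au("AU6"), au("AU7"))    # cheek raising / lid tightening
        + max(au("AU9"), au("AU10"))   # nose wrinkling / upper lip raising
        + au("AU43")                   # eye closure
    )


# Usage: intensities as an automatic action unit detector might report them for one video frame.
frame = {"AU4": 2, "AU6": 1, "AU7": 3, "AU9": 0, "AU10": 1, "AU43": 1}
print(pspi_score(frame))  # 2 + max(1, 3) + max(0, 1) + 1 = 7
```

A fixed rule like this yields a single number without any uncertainty estimate, which is one reason probabilistic approaches such as the Bayesian deep learning methods mentioned above are of interest for this task.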